飞桨常规赛:PALM眼底彩照中黄斑中央凹定位-9月第1名方案

(1)比赛介绍

赛题介绍

榜首个人主页,戳此处查看

PALM黄斑定位常规赛的重点是研究和发展与患者眼底照片黄斑结构定位相关的算法。该常规赛的目标是评估和比较在一个常见的视网膜眼底图像数据集上定位黄斑的自动算法。具体目的是预测黄斑中央凹在图像中的坐标值。

中央凹是视网膜中辨色力、分辨力最敏锐的区域。以人为例,在视盘颞侧约3.5mm处,有一黄色小区,称黄斑,其中央的凹陷,就是中央凹。中央凹的准确定位可辅助医生完成糖尿病视网膜、黄斑变性等病变的诊断。

赛程赛制

(1)飞桨常规赛面向全社会公开报名,直至赛题下线;

(2)飞桨常规赛不设初赛、复赛,以当月每位参赛选手提交的最优成绩排名。每月竞赛周期为本月 1 日至本月最后 1 日;

(3)比赛期间选手每天最多可提交 5 次作品(预测结果+原始代码),系统自动选取最高成绩作为榜单记录;

(4)每个月 1-5 日公布上一个月总榜。当月排名前10 且通过代码复查的选手可获得由百度飞桨颁发的荣誉证书。对于初次上榜的参赛选手,还可额外获得1份特别礼包(1个飞桨周边奖品+ 100小时GPU算力卡)。工作人员将以邮件形式通知上一月排名前10的选手提交材料供代码复查,请各位参赛选手留意邮箱通知。特别提醒: 已获得过特别礼包的参赛选手,如果基于本赛题撰写新的studio项目并被评为精选,才可再次获得1份特别礼包;

(5) score超过0.04的第一位选手可额外获得大奖:小度在家;

(6) 鼓励选手报名多个主题的飞桨常规赛,以赛促学,全方面提升开发者的深度学习能力。

(2)数据介绍

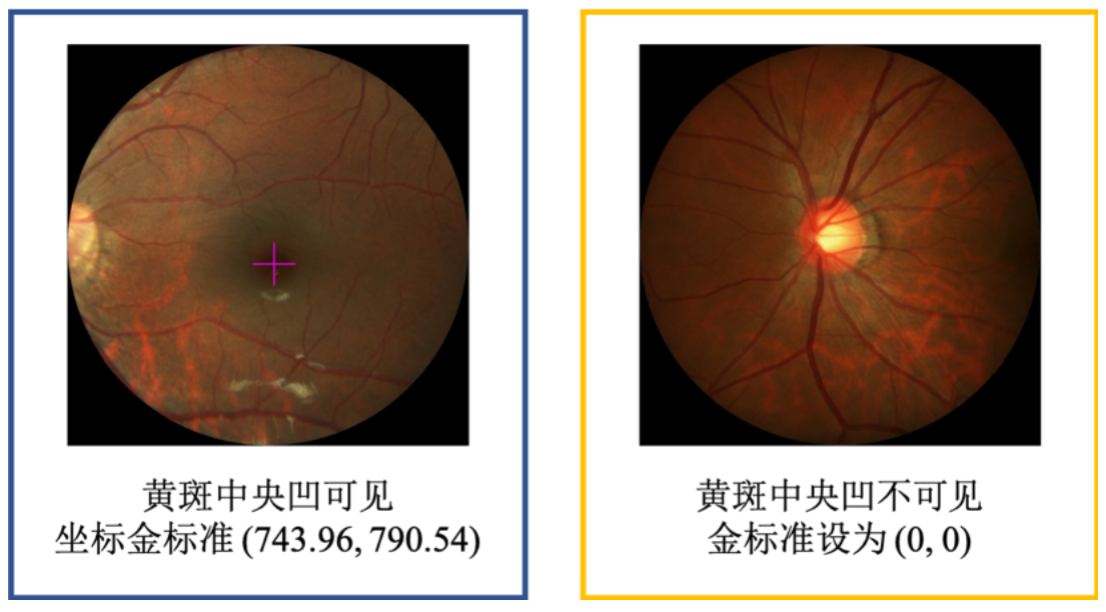

PALM病理性近视预测常规赛由中山大学中山眼科中心提供800张带黄斑中央凹坐标标注的眼底彩照供选手训练模型,另提供400张带标注数据供平台进行模型测试。

数据说明

本次常规赛提供的金标准由中山大学中山眼科中心的7名眼科医生手工进行标注,之后由另一位高级专家将它们融合为最终的标注结果。本比赛提供数据集对应的黄斑中央凹坐标信息存储在xlsx文件中,名为“Fovea_Location_train”,第一列对应眼底图像的文件名(包括扩展名“.jpg”),第二列包含x坐标,第三列包含y坐标。

图

训练数据集

文件名称:Train

Train文件夹里有一个文件夹fundus_images和一个xlsx文件。

fundus_images文件夹内包含800张眼底彩照,分辨率为1444×1444,或2124×2056。命名形如H0001.jpg、P0001.jpg、N0001.jpg和V0001.jpg。

xlsx文件中包含800张眼底彩照对应的x、y坐标信息。

测试数据集

文件名称:PALM-Testing400-Images 文件夹里包含400张眼底彩照,命名形如T0001.jpg。

(3)个人思路+个人方案亮点

自定义数据集读取图片和标签

class dataset(paddle.io.Dataset):

def __init__(self,img_list,label_listx,label_listy,transform=None,transform2=None,mode='train'):

self.image=img_list

self.labelx=label_listx

self.labely=label_listy

self.mode=mode

self.transform=transform

self.transform2=transform2

def load_img(self, image_path):

img=cv2.imread(image_path,1)

img=cv2.cvtColor(img,cv2.COLOR_BGR2RGB)

return img

def __getitem__(self,index):

img = self.load_img(self.image[index])

labelx = self.labelx[index]

labely = self.labely[index]

img_size=img.shape

label=np.array([labelx,labely])

img=np.array(img,dtype='float32')

label=np.array(label,dtype='float32')

if self.transform:

if self.mode=='train':

img, label = self.transform([img,label])

else:

img, label = self.transform2([img, label])

img=np.array(img,dtype='float32')

label=np.array(label,dtype='float32')

return img,label

def __len__(self):

return len(self.image)

使用数据增强方法对数据进行增广

class Resize(object):

# 将输入图像调整为指定大小

def __init__(self, output_size):

assert isinstance(output_size, (int, tuple))

self.output_size = output_size

def __call__(self, data):

image = data[0] # 获取图片

key_pts = data[1] # 获取标签

image_copy = np.copy(image)

key_pts_copy = np.copy(key_pts)

h, w = image_copy.shape[:2]

new_h, new_w = self.output_size,self.output_size

new_h, new_w = int(new_h), int(new_w)

img = F.resize(image_copy, (new_h, new_w))

# scale the pts, too

#key_pts_copy[::2] = key_pts_copy[::2] * new_w / w

#key_pts_copy[1::2] = key_pts_copy[1::2] * new_h / h

return img, key_pts_copy

class GrayNormalize(object):

# 将图片变为灰度图,并将其值放缩到[0, 1]

# 将 label 放缩到 [-1, 1] 之间

def __call__(self, data):

image = data[0] # 获取图片

key_pts = data[1] # 获取标签

image_copy = np.copy(image)

key_pts_copy = np.copy(key_pts)

# 灰度化图片

gray_scale = paddle.vision.transforms.Grayscale(num_output_channels=3)

image_copy = gray_scale(image_copy)

# 将图片值放缩到 [0, 1]

image_copy = (image_copy-127.5) / 127.5

# 将坐标点放缩到 [-1, 1]

#mean = data_mean # 获取标签均值

#std = data_std # 获取标签标准差

#key_pts_copy = (key_pts_copy - mean)/std

return image_copy, key_pts_copy

class ToCHW(object):

# 将图像的格式由HWC改为CHW

def __call__(self, data):

image = data[0]

key_pts = data[1]

transpose = T.Transpose((2, 0, 1)) # 改为CHW

image = transpose(image)

return image, key_pts

异步加载数据

train_loader = paddle.io.DataLoader(train_ds, places=paddle.CPUPlace(), batch_size=32, shuffle=True, num_workers=0)

val_loader = paddle.io.DataLoader(val_ds, places=paddle.CPUPlace(), batch_size=32, shuffle=True, num_workers=0)

test_loader=paddle.io.DataLoader(test_ds, places=paddle.CPUPlace(), batch_size=32, shuffle=False, num_workers=0)

使用预训练模型进行训练

如:resnet50、resnet101、resnet152、mobilenet_v1、mobilenet_v2等

self.net=paddle.vision.resnet152(pretrained=True)

使用 cosine annealing 的策略来动态调整学习率

lr = paddle.optimizer.lr.CosineAnnealingDecay(learning_rate=1e-4,T_max=64)

尝试了新的网络结构:

class MyNet(paddle.nn.Layer):

def __init__(self):

super(MyNet, self).__init__()

self.resnet = paddle.vision.resnet50(pretrained=True, num_classes=0) # remove final fc 输出为[?, 2048, 1, 1]

self.flatten = paddle.nn.Flatten()

self.linear_1 = paddle.nn.Linear(2048, 512)

self.linear_2 = paddle.nn.Linear(512, 256)

self.linear_3 = paddle.nn.Linear(256, 2)

self.relu = paddle.nn.ReLU()

self.dropout = paddle.nn.Dropout(0.2)

def forward(self, inputs):

# print('input', inputs)

y = self.resnet(inputs)

y = self.flatten(y)

y = self.linear_1(y)

y = self.linear_2(y)

y = self.relu(y)

y = self.dropout(y)

y = self.linear_3(y)

y = paddle.nn.functional.sigmoid(y)

return y

尝试了自定义损失函数:

def cal_coordinate_Loss(logit, label, alpha = 0.5):

"""

logit: shape [batch, ndim]

label: shape [batch, ndim]

ndim = 2 represents coordinate_x and coordinaate_y

alpha: weight for MSELoss and 1-alpha for ED loss

return: combine MSELoss and ED Loss for x and y, shape [batch, 1]

"""

alpha = alpha

mse_loss = nn.MSELoss(reduction='mean')

mse_x = mse_loss(logit[:,0],label[:,0])

mse_y = mse_loss(logit[:,1],label[:,1])

mse_l = 0.5*(mse_x + mse_y)

# print('mse_l', mse_l)

ed_loss = []

# print(logit.shape[0])

for i in range(logit.shape[0]):

logit_tmp = logit[i,:].numpy()

label_tmp = label[i,:].numpy()

# print('cal_coordinate_loss_ed', logit_tmp, label_tmp)

ed_tmp = euclidean_distances([logit_tmp], [label_tmp])

# print('ed_tmp:', ed_tmp[0][0])

ed_loss.append(ed_tmp)

ed_l = sum(ed_loss)/len(ed_loss)

# print('ed_l', ed_l)

# print('alpha', alpha)

loss = alpha * mse_l + (1-alpha) * ed_l

# print('loss in function', loss)

return loss

class SelfDefineLoss(paddle.nn.Layer):

"""

1. 继承paddle.nn.Layer

"""

def __init__(self):

"""

2. 构造函数根据自己的实际算法需求和使用需求进行参数定义即可

"""

super(SelfDefineLoss, self).__init__()

def forward(self, input, label):

"""

3. 实现forward函数,forward在调用时会传递两个参数:input和label

- input:单个或批次训练数据经过模型前向计算输出结果

- label:单个或批次训练数据对应的标签数据

接口返回值是一个Tensor,根据自定义的逻辑加和或计算均值后的损失

"""

# 使用PaddlePaddle中相关API自定义的计算逻辑

output = cal_coordinate_Loss(input,label)

return output

(4)具体方案分享

代码参考:『深度学习7日打卡营』人脸关键点检测

解压数据集

!unzip -oq /home/aistudio/data/data116960/常规赛:PALM眼底彩照中黄斑中央凹定位.zip

!mv │г╣ц╚№г║PALM╤█╡╫▓╩╒╒╓╨╗╞░▀╓╨╤ы░╝╢и╬╗ 常规赛:PALM眼底彩照中黄斑中央凹定位

!rm -rf __MACOSX

!mv /home/aistudio/PALM眼底彩照中黄斑中央凹定位/* /home/aistudio/work/常规赛:PALM眼底彩照中黄斑中央凹定位/

查看数据标签

import blackhole.dataframe as pd

df=pd.read_excel('常规赛:PALM眼底彩照中黄斑中央凹定位/Train/Fovea_Location_train.xlsx')

df.head()

| imgName | Fovea_X | Fovea_Y | |

|---|---|---|---|

| 0 | H0001.jpg | 743.96 | 790.54 |

| 1 | H0002.jpg | 1394.82 | 725.54 |

| 2 | H0003.jpg | 1361.74 | 870.72 |

| 3 | H0004.jpg | 703.15 | 742.44 |

| 4 | H0005.jpg | 1070.95 | 1037.54 |

# 计算标签的均值和标准差,用于标签的归一化

key_pts_values = df.values[:,1:] # 取出标签信息

data_mean = key_pts_values.mean() # 计算均值

data_std = key_pts_values.std() # 计算标准差

print('标签的均值为:', data_mean)

print('标签的标准差为:', data_std)

标签的均值为: 1085.6073687500023

标签的标准差为: 183.5345073716085

数据增强

import paddle.vision.transforms.functional as F

class Resize(object):

# 将输入图像调整为指定大小

def __init__(self, output_size):

assert isinstance(output_size, (int, tuple))

self.output_size = output_size

def __call__(self, data):

image = data[0] # 获取图片

key_pts = data[1] # 获取标签

image_copy = np.copy(image)

key_pts_copy = np.copy(key_pts)

h, w = image_copy.shape[:2]

new_h, new_w = self.output_size,self.output_size

new_h, new_w = int(new_h), int(new_w)

img = F.resize(image_copy, (new_h, new_w))

# scale the pts, too

#key_pts_copy[::2] = key_pts_copy[::2] * new_w / w

#key_pts_copy[1::2] = key_pts_copy[1::2] * new_h / h

return img, key_pts_copy

class GrayNormalize(object):

# 将图片变为灰度图,并将其值放缩到[0, 1]

# 将 label 放缩到 [-1, 1] 之间

def __call__(self, data):

image = data[0] # 获取图片

key_pts = data[1] # 获取标签

image_copy = np.copy(image)

key_pts_copy = np.copy(key_pts)

# 灰度化图片

gray_scale = paddle.vision.transforms.Grayscale(num_output_channels=3)

image_copy = gray_scale(image_copy)

# 将图片值放缩到 [0, 1]

image_copy = (image_copy-127.5) / 127.5

# 将坐标点放缩到 [-1, 1]

#mean = data_mean # 获取标签均值

#std = data_std # 获取标签标准差

#key_pts_copy = (key_pts_copy - mean)/std

return image_copy, key_pts_copy

class ToCHW(object):

# 将图像的格式由HWC改为CHW

def __call__(self, data):

image = data[0]

key_pts = data[1]

transpose = T.Transpose((2, 0, 1)) # 改为CHW

image = transpose(image)

return image, key_pts

import paddle.vision.transforms as T

data_transform = T.Compose([

Resize(224),

GrayNormalize(),

ToCHW(),

])

data_transform2 = T.Compose([

Resize(224),

GrayNormalize(),

ToCHW(),

])

自定义数据集

path='常规赛:PALM眼底彩照中黄斑中央凹定位/Train/fundus_image/'

df=df.sample(frac=1)

image_list=[]

label_listx=[]

label_listy=[]

for i in range(len(df)):

image_list.append(path+df['imgName'][i])

label_listx.append(df['Fovea_X'][i])

label_listy.append(df['Fovea_Y'][i])

import os

test_path='常规赛:PALM眼底彩照中黄斑中央凹定位/PALM-Testing400-Images'

test_list=[]

test_labelx=[]

test_labely=[]

list = os.listdir(test_path) # 列出文件夹下所有的目录与文件

for i in range(0, len(list)):

path = os.path.join(test_path, list[i])

test_list.append(path)

test_labelx.append(0)

test_labely.append(0)

import paddle

import cv2

import numpy as np

class dataset(paddle.io.Dataset):

def __init__(self,img_list,label_listx,label_listy,transform=None,transform2=None,mode='train'):

self.image=img_list

self.labelx=label_listx

self.labely=label_listy

self.mode=mode

self.transform=transform

self.transform2=transform2

def load_img(self, image_path):

img=cv2.imread(image_path,1)

img=cv2.cvtColor(img,cv2.COLOR_BGR2RGB)

h,w,c=img.shape

return img,h,w

def __getitem__(self,index):

img,h,w = self.load_img(self.image[index])

labelx = self.labelx[index]/w

labely = self.labely[index]/h

img_size=img.shape

if self.transform:

if self.mode=='train':

img, label = self.transform([img, [labelx,labely]])

else:

img, label = self.transform2([img, [labelx,labely]])

label=np.array(label,dtype='float32')

img=np.array(img,dtype='float32')

return img,label

def __len__(self):

return len(self.image)

训练集、验证集、测试集

radio=0.8

train_list=image_list[:int(len(image_list)*radio)]

train_labelx=label_listx[:int(len(label_listx)*radio)]

train_labely=label_listy[:int(len(label_listy)*radio)]

val_list=image_list[int(len(image_list)*radio):]

val_labelx=label_listx[int(len(label_listx)*radio):]

val_labely=label_listy[int(len(label_listy)*radio):]

train_ds=dataset(train_list,train_labelx,train_labely,data_transform,data_transform2,'train')

val_ds=dataset(val_list,val_labelx,val_labely,data_transform,data_transform2,'valid')

test_ds=dataset(test_list,test_labelx,test_labely,data_transform,data_transform2,'test')

查看图片

import matplotlib.pyplot as plt

for i,data in enumerate(train_ds):

img,label=data

img=img.transpose([1,2,0])

print(img.shape)

plt.title(label)

plt.imshow(img)

plt.show()

if i==0:

break

Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

(224, 224, 3)

模型组网

以下两个网络结构任选一

class MyNet(paddle.nn.Layer):

def __init__(self,num_classes=2):

super(MyNet,self).__init__()

self.net=paddle.vision.resnet152(pretrained=True)

self.fc1=paddle.nn.Linear(1000,512)

self.relu=paddle.nn.ReLU()

self.fc2=paddle.nn.Linear(512,num_classes)

def forward(self,inputs):

out=self.net(inputs)

out=self.fc1(out)

out=self.relu(out)

out=self.fc2(out)

return out

class MyNet(paddle.nn.Layer):

def __init__(self):

super(MyNet, self).__init__()

self.resnet = paddle.vision.resnet50(pretrained=True, num_classes=0) # remove final fc 输出为[?, 2048, 1, 1]

self.flatten = paddle.nn.Flatten()

self.linear_1 = paddle.nn.Linear(2048, 512)

self.linear_2 = paddle.nn.Linear(512, 256)

self.linear_3 = paddle.nn.Linear(256, 2)

self.relu = paddle.nn.ReLU()

self.dropout = paddle.nn.Dropout(0.2)

def forward(self, inputs):

y = self.resnet(inputs)

y = self.flatten(y)

y = self.linear_1(y)

y = self.linear_2(y)

y = self.relu(y)

y = self.dropout(y)

y = self.linear_3(y)

y = paddle.nn.functional.sigmoid(y)

return y

异步加载数据

train_loader = paddle.io.DataLoader(train_ds, places=paddle.CPUPlace(), batch_size=32, shuffle=True, num_workers=0)

val_loader = paddle.io.DataLoader(val_ds, places=paddle.CPUPlace(), batch_size=32, shuffle=False, num_workers=0)

test_loader=paddle.io.DataLoader(test_ds, places=paddle.CPUPlace(), batch_size=32, shuffle=False, num_workers=0)

自定义损失函数

from sklearn.metrics.pairwise import euclidean_distances

import paddle.nn as nn

# 损失函数

def cal_coordinate_Loss(logit, label, alpha = 0.5):

"""

logit: shape [batch, ndim]

label: shape [batch, ndim]

ndim = 2 represents coordinate_x and coordinaate_y

alpha: weight for MSELoss and 1-alpha for ED loss

return: combine MSELoss and ED Loss for x and y, shape [batch, 1]

"""

alpha = alpha

mse_loss = nn.MSELoss(reduction='mean')

mse_x = mse_loss(logit[:,0],label[:,0])

mse_y = mse_loss(logit[:,1],label[:,1])

mse_l = 0.5*(mse_x + mse_y)

# print('mse_l', mse_l)

ed_loss = []

# print(logit.shape[0])

for i in range(logit.shape[0]):

logit_tmp = logit[i,:].numpy()

label_tmp = label[i,:].numpy()

# print('cal_coordinate_loss_ed', logit_tmp, label_tmp)

ed_tmp = euclidean_distances([logit_tmp], [label_tmp])

# print('ed_tmp:', ed_tmp[0][0])

ed_loss.append(ed_tmp)

ed_l = sum(ed_loss)/len(ed_loss)

# print('ed_l', ed_l)

# print('alpha', alpha)

loss = alpha * mse_l + (1-alpha) * ed_l

# print('loss in function', loss)

return loss

class SelfDefineLoss(paddle.nn.Layer):

"""

1. 继承paddle.nn.Layer

"""

def __init__(self):

"""

2. 构造函数根据自己的实际算法需求和使用需求进行参数定义即可

"""

super(SelfDefineLoss, self).__init__()

def forward(self, input, label):

"""

3. 实现forward函数,forward在调用时会传递两个参数:input和label

- input:单个或批次训练数据经过模型前向计算输出结果

- label:单个或批次训练数据对应的标签数据

接口返回值是一个Tensor,根据自定义的逻辑加和或计算均值后的损失

"""

# 使用PaddlePaddle中相关API自定义的计算逻辑

output = cal_coordinate_Loss(input,label)

return output

模型训练与可视化

如果图片尺寸较大应适当调小Batch_size,防止爆显存。

from utils import NME

visualdl=paddle.callbacks.VisualDL(log_dir='visual_log')

#定义输入

Batch_size=32

EPOCHS=20

step_each_epoch = len(train_ds)//Batch_size

# 使用 paddle.Model 封装模型

model = paddle.Model(MyNet())

#模型加载

model.load('/home/aistudio/work/lup/final')

lr = paddle.optimizer.lr.CosineAnnealingDecay(learning_rate=1e-5,

T_max=step_each_epoch * EPOCHS)

# 定义Adam优化器

optimizer = paddle.optimizer.Adam(learning_rate=lr,

weight_decay=1e-5,

parameters=model.parameters())

# 定义SmoothL1Loss

loss =paddle.nn.SmoothL1Loss()

#loss =SelfDefineLoss()

# 使用自定义metrics

metric = NME()

model.prepare(optimizer=optimizer, loss=loss, metrics=metric)

# 训练可视化VisualDL工具的回调函数

# 启动模型全流程训练

model.fit(train_loader, # 训练数据集

val_loader, # 评估数据集

epochs=EPOCHS, # 训练的总轮次

batch_size=Batch_size, # 训练使用的批大小

save_dir="/home/aistudio/work/lup", #把模型参数、优化器参数保存至自定义的文件夹

save_freq=1, #设定每隔多少个epoch保存模型参数及优化器参数

verbose=1 , # 日志展示形式

callbacks=[visualdl]

) # 设置可视化

The loss value printed in the log is the current step, and the metric is the average value of previous steps.

Epoch 1/20

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/norm.py:641: UserWarning: When training, we now always track global mean and variance.

"When training, we now always track global mean and variance.")

step 20/20 [==============================] - loss: 2.4430e-04 - nme: 0.0307 - 2s/step

save checkpoint at /home/aistudio/work/lup/0

Eval begin...

step 5/5 [==============================] - loss: 2.0670e-04 - nme: 0.0364 - 2s/step

Eval samples: 160

Epoch 2/20

step 20/20 [==============================] - loss: 2.6849e-04 - nme: 0.0280 - 2s/step

save checkpoint at /home/aistudio/work/lup/1

Eval begin...

step 5/5 [==============================] - loss: 1.7744e-04 - nme: 0.0347 - 2s/step

Eval samples: 160

Epoch 3/20

step 20/20 [==============================] - loss: 1.6573e-04 - nme: 0.0250 - 2s/step

save checkpoint at /home/aistudio/work/lup/2

Eval begin...

step 5/5 [==============================] - loss: 2.2369e-04 - nme: 0.0359 - 2s/step

Eval samples: 160

Epoch 4/20

step 20/20 [==============================] - loss: 2.4454e-04 - nme: 0.0250 - 2s/step

save checkpoint at /home/aistudio/work/lup/3

Eval begin...

step 5/5 [==============================] - loss: 2.0749e-04 - nme: 0.0345 - 2s/step

Eval samples: 160

Epoch 5/20

step 20/20 [==============================] - loss: 2.6878e-04 - nme: 0.0244 - 2s/step

save checkpoint at /home/aistudio/work/lup/4

Eval begin...

step 5/5 [==============================] - loss: 2.2863e-04 - nme: 0.0352 - 2s/step

Eval samples: 160

Epoch 6/20

step 20/20 [==============================] - loss: 2.1071e-04 - nme: 0.0224 - 2s/step

save checkpoint at /home/aistudio/work/lup/5

Eval begin...

step 5/5 [==============================] - loss: 1.7807e-04 - nme: 0.0345 - 2s/step

Eval samples: 160

Epoch 7/20

step 20/20 [==============================] - loss: 3.4471e-04 - nme: 0.0229 - 2s/step

save checkpoint at /home/aistudio/work/lup/6

Eval begin...

step 5/5 [==============================] - loss: 1.7128e-04 - nme: 0.0337 - 2s/step

Eval samples: 160

Epoch 8/20

step 20/20 [==============================] - loss: 1.9624e-04 - nme: 0.0224 - 2s/step

save checkpoint at /home/aistudio/work/lup/7

Eval begin...

step 5/5 [==============================] - loss: 1.7516e-04 - nme: 0.0339 - 2s/step

Eval samples: 160

Epoch 9/20

step 20/20 [==============================] - loss: 1.2843e-04 - nme: 0.0213 - 2s/step

save checkpoint at /home/aistudio/work/lup/8

Eval begin...

step 5/5 [==============================] - loss: 2.3785e-04 - nme: 0.0355 - 2s/step

Eval samples: 160

Epoch 10/20

step 20/20 [==============================] - loss: 2.0355e-04 - nme: 0.0207 - 2s/step

save checkpoint at /home/aistudio/work/lup/9

Eval begin...

step 5/5 [==============================] - loss: 1.8947e-04 - nme: 0.0341 - 2s/step

Eval samples: 160

Epoch 11/20

step 20/20 [==============================] - loss: 2.8434e-04 - nme: 0.0210 - 2s/step

save checkpoint at /home/aistudio/work/lup/10

Eval begin...

step 5/5 [==============================] - loss: 2.1608e-04 - nme: 0.0342 - 2s/step

Eval samples: 160

Epoch 12/20

step 20/20 [==============================] - loss: 2.5887e-04 - nme: 0.0205 - 2s/step

save checkpoint at /home/aistudio/work/lup/11

Eval begin...

step 5/5 [==============================] - loss: 1.9807e-04 - nme: 0.0343 - 2s/step

Eval samples: 160

Epoch 13/20

step 20/20 [==============================] - loss: 1.1868e-04 - nme: 0.0193 - 2s/step

save checkpoint at /home/aistudio/work/lup/12

Eval begin...

step 5/5 [==============================] - loss: 1.8711e-04 - nme: 0.0338 - 2s/step

Eval samples: 160

Epoch 14/20

step 20/20 [==============================] - loss: 1.3952e-04 - nme: 0.0196 - 2s/step

save checkpoint at /home/aistudio/work/lup/13

Eval begin...

step 5/5 [==============================] - loss: 1.8402e-04 - nme: 0.0335 - 2s/step

Eval samples: 160

Epoch 15/20

step 20/20 [==============================] - loss: 1.2068e-04 - nme: 0.0200 - 2s/step

save checkpoint at /home/aistudio/work/lup/14

Eval begin...

step 5/5 [==============================] - loss: 1.9125e-04 - nme: 0.0338 - 2s/step

Eval samples: 160

Epoch 16/20

step 20/20 [==============================] - loss: 1.9597e-04 - nme: 0.0199 - 2s/step

save checkpoint at /home/aistudio/work/lup/15

Eval begin...

step 5/5 [==============================] - loss: 1.8797e-04 - nme: 0.0336 - 2s/step

Eval samples: 160

Epoch 17/20

step 20/20 [==============================] - loss: 9.2524e-05 - nme: 0.0186 - 2s/step

save checkpoint at /home/aistudio/work/lup/16

Eval begin...

step 5/5 [==============================] - loss: 1.9081e-04 - nme: 0.0339 - 2s/step

Eval samples: 160

Epoch 18/20

step 20/20 [==============================] - loss: 1.3978e-04 - nme: 0.0190 - 2s/step

save checkpoint at /home/aistudio/work/lup/17

Eval begin...

step 5/5 [==============================] - loss: 1.9073e-04 - nme: 0.0338 - 2s/step

Eval samples: 160

Epoch 19/20

step 20/20 [==============================] - loss: 1.6930e-04 - nme: 0.0185 - 2s/step

save checkpoint at /home/aistudio/work/lup/18

Eval begin...

step 5/5 [==============================] - loss: 1.9041e-04 - nme: 0.0336 - 2s/step

Eval samples: 160

Epoch 20/20

step 20/20 [==============================] - loss: 5.3823e-05 - nme: 0.0185 - 2s/step

save checkpoint at /home/aistudio/work/lup/19

Eval begin...

step 5/5 [==============================] - loss: 1.9054e-04 - nme: 0.0337 - 2s/step

Eval samples: 160

save checkpoint at /home/aistudio/work/lup/final

模型评估

# 模型评估

model.load('/home/aistudio/work/lup/13')

result = model.evaluate(val_loader, verbose=1)

print(result)

Eval begin...

step 5/5 [==============================] - loss: 1.8402e-04 - nme: 0.0335 - 2s/step

Eval samples: 160

{'loss': [0.00018401867], 'nme': 0.033542318427836}

进行预测操作

# 进行预测操作

result = model.predict(test_loader)

Predict begin...

step 13/13 [==============================] - 2s/step

Predict samples: 400

# 获取测试图片尺寸和图片名

test_path='常规赛:PALM眼底彩照中黄斑中央凹定位/PALM-Testing400-Images'

test_size=[]

FileName=[]

for i in range(len(list)):

path = os.path.join(test_path, list[i])

img=cv2.imread(path,1)

test_size.append(img.shape)

FileName.append(list[i])

test_size=np.array(test_size)

result=np.array(result)

pred=[]

for i in range(len(result[0])):

pred.extend(result[0][i])

pred=np.array(pred)

pred = paddle.to_tensor(pred)

out=np.array(pred).reshape(-1,2)

#Fovea_X=out[:,0]*data_std+data_mean

#Fovea_Y=out[:,1]*data_std+data_mean

Fovea_X=out[:,0]

Fovea_Y=out[:,1]

Fovea_X=Fovea_X*test_size[:,1]

Fovea_Y=Fovea_Y*test_size[:,0]

submission = pd.DataFrame(data={

"FileName": FileName,

"Fovea_X": Fovea_X,

"Fovea_Y": Fovea_Y

})

submission=submission.sort_values(by='FileName')

submission.to_csv("result.csv", index=False)

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/ipykernel_launcher.py:1: VisibleDeprecationWarning: Creating an ndarray from ragged nested sequences (which is a list-or-tuple of lists-or-tuples-or ndarrays with different lengths or shapes) is deprecated. If you meant to do this, you must specify 'dtype=object' when creating the ndarray.

"""Entry point for launching an IPython kernel.

结果文件查看

submission.head()

| FileName | Fovea_X | Fovea_Y | |

|---|---|---|---|

| 358 | T0001.jpg | 1285.678868 | 988.328547 |

| 227 | T0002.jpg | 1073.538728 | 1069.430128 |

| 116 | T0003.jpg | 1067.282902 | 1032.210720 |

| 51 | T0004.jpg | 1178.123714 | 984.575601 |

| 315 | T0005.jpg | 1268.955749 | 740.036470 |

结果投票集成

简单投票集成学习,这个可以提升效果,尽量选得分高的进行投票。

在统计学和机器学习中,集成学习方法使用多种学习算法来获得比单独使用任何单独的学习算法更好的预测性能。

使用不同超参数、不同的特征,不同的结构,运行多次模型可得到不同的预测结果。在这里我使用的是简单投票法,取平均值作为最终的预测结果。 预测出多个result后,进行投票,代码如下:

import numpy as np

import blackhole.dataframe as pd

df1=pd.read_csv('result41.958.csv')

df2=pd.read_csv('result41.958.csv')

df3=pd.read_csv('result49.65447.csv')

df4=pd.read_csv('result49.75246.csv')

dfs=[df1,df2,df3,df4]

File_Name=[]

Fovea_X=[]

Fovea_Y=[]

for i in range(len(df1)):

File_Name.append(dfs[0]['FileName'][i])

avgx=(sum(np.array(dfs[x]['Fovea_X'][i]) for x in range(len(dfs))))/len(dfs)

avgy=(sum(np.array(dfs[x]['Fovea_Y'][i]) for x in range(len(dfs))))/len(dfs)

Fovea_X.append(avgx)

Fovea_Y.append(avgy)

submission = pd.DataFrame(data={

"FileName": File_Name,

"Fovea_X": Fovea_X,

"Fovea_Y":Fovea_Y

})

submission=submission.sort_values(by='FileName')

p.array(dfs[x]['Fovea_Y'][i]) for x in range(len(dfs))))/len(dfs)

Fovea_X.append(avgx)

Fovea_Y.append(avgy)

submission = pd.DataFrame(data={

"FileName": File_Name,

"Fovea_X": Fovea_X,

"Fovea_Y":Fovea_Y

})

submission=submission.sort_values(by='FileName')

submission.to_csv("result.csv", index=False)

(5)总结及改善方向

1、多试几个的预训练模型。

2、选择合适的学习率。

3、更换别的优化器。

4、投票方法能提高成绩,但是存在天花板。

5、使用自定义损失函数时经常出现“UserWarning: When training, we now always track global mean and variance.”,还没有解决。

6、曾专门用训练神经网络对中央凹可见、不可见情况进行了分类,结果测试集并未分到中央凹不可见的类别,但是总觉得测试集定位应该有(0,0),不是很确定。

(6)飞桨使用体验+给其他选手学习飞桨的建议

建议大家多参加百度AI Studio课程,多看别人写的AI Studio项目,也许会有灵感迸发,在比赛中取得更好的成绩。

(7)One More Thing

眼底彩照中黄斑中央凹定位相关论文

1.Pathological myopia classification with simultaneous lesion segmentation using deep learning

2.Detection of Pathological Myopia and Optic Disc Segmentation with Deep Convolutional Neural Networks

我在AI Studio上获得黄金等级,点亮9个徽章,来互关呀~