I. Introduction

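In the previous article, "Exploring the Principles of Distributed Transactions in Seata AT Mode", we looked at distributed transactions and at how they work under Seata's AT mode. In this article, we integrate Seata with Spring Cloud and Spring Cloud Alibaba to control distributed transactions, using Nacos as the registry and registering Seata with Nacos.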

II. Environment Setup

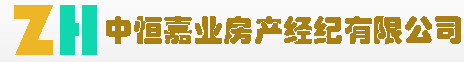

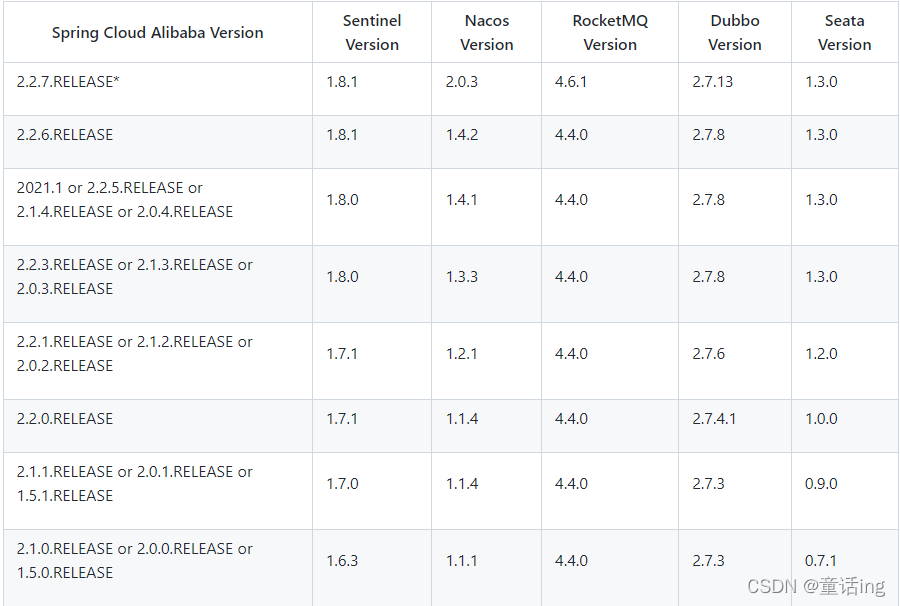

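This article mainly involves the Nacos and Seata environments. Let's first look at Alibaba's official recommendations for combining the components. For the detailed version recommendations, see the "版本说明" (version notes) page of the Spring Cloud Alibaba wiki.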

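The table below lists Spring Cloud Alibaba releases in chronological order, together with the Spring Cloud and Spring Boot versions each one is compatible with (because Spring Cloud changed its version naming scheme, Spring Cloud Alibaba's version numbers changed accordingly).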

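The Nacos environment was set up earlier, so this article focuses on preparing Seata; covering Nacos as well would take a lot of space. Nacos is a powerful component open-sourced by Alibaba on GitHub, and readers can download and install it from the Nacos website.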

Before installing Nacos, make sure Java 8 and Maven are installed locally.

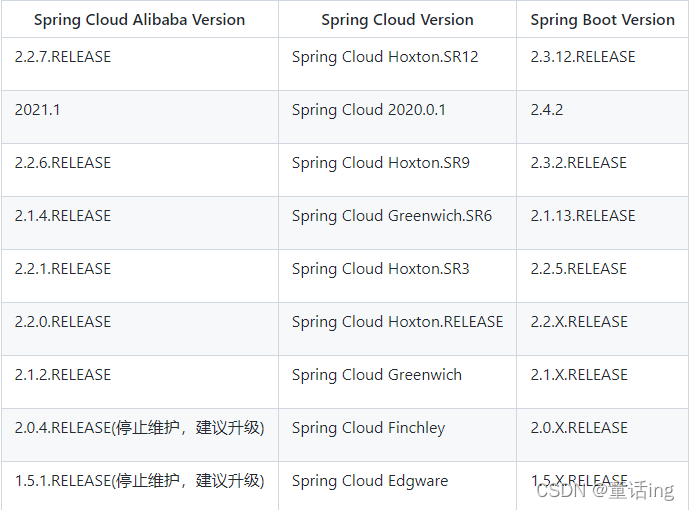

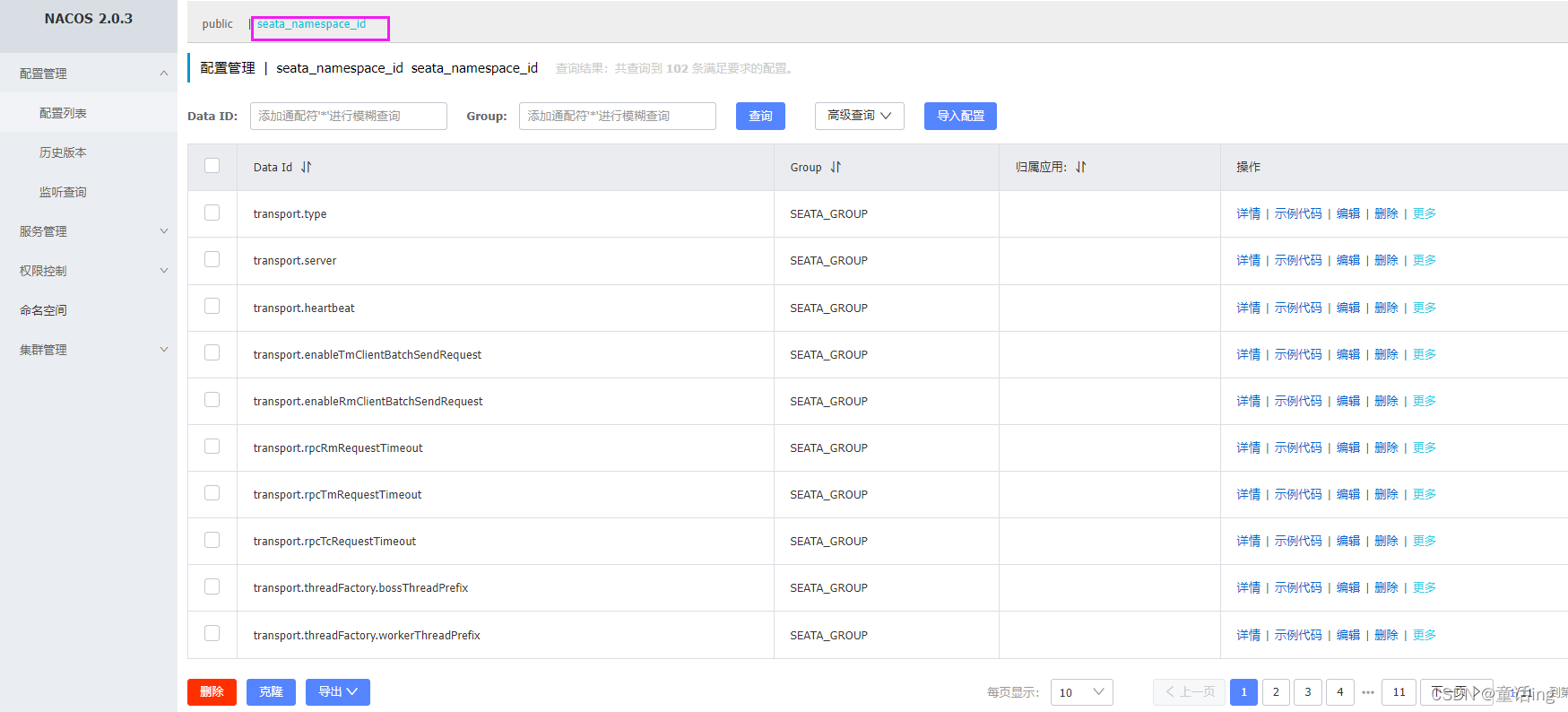

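Here Nacos serves as both the registry and the configuration center, so create a namespace in Nacos whose namespace ID is seata_namespace_id. Note that this must be the namespace ID: the first time, I entered it as the namespace name instead, and the configuration was persisted to the Nacos database but did not show up in the Nacos console.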

2.2 Installing Seata Server

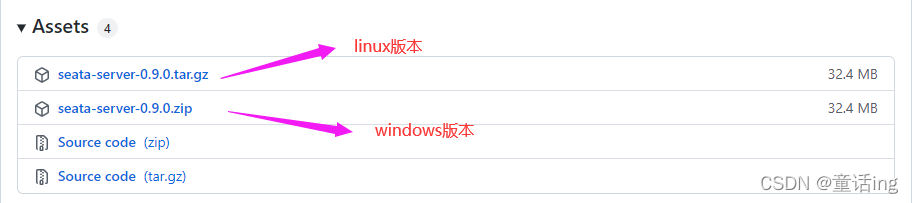

To match the Spring Cloud version I am using, I downloaded version 0.9.0. Installing Seata Server is painless: download the Seata-Server archive from the Seata website and extract it (zip for Windows, tar.gz for Linux).

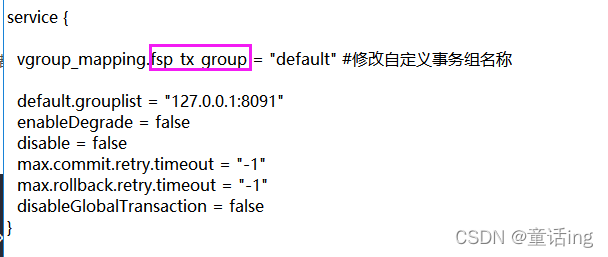

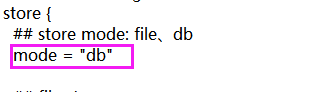

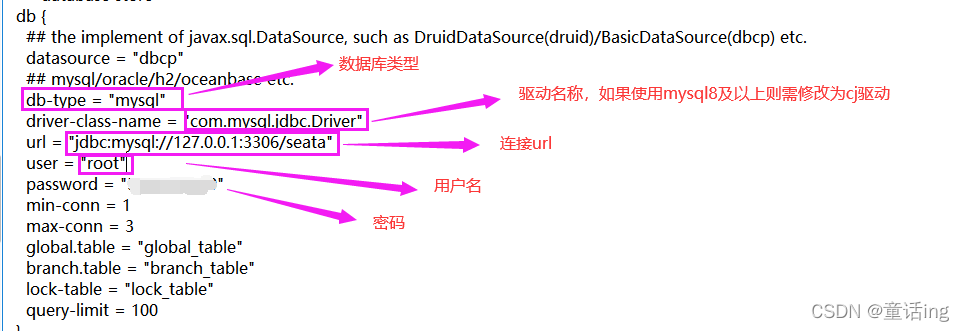

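Version note: starting with 1.0, Seata's configuration files and usage changed somewhat. For 0.9.0, extract seata-server-0.9.0.zip to a directory of your choice and edit file.conf in the conf directory (usually seata-server-0.9.0\seata\conf\file.conf; registry.conf lives in the same directory). The changes are a custom transaction group name, the transaction log store mode set to db, and the database connection details.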

file.conf for version 0.9.0

1. In the service block, change the custom transaction group name:

2. In the store block, change the persistence mode to db:

3. Then change the database connection details in the db block:

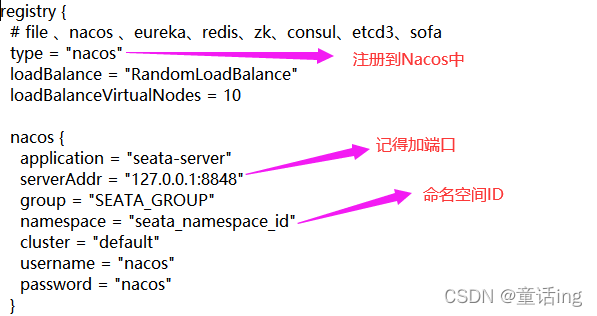

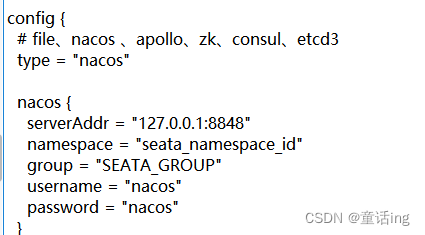
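The custom transaction group name from step 1 is what ties a client to the server. As I understand Seata's lookup, a client configured with a transaction group (spring.cloud.alibaba.seata.tx-service-group, or seata.tx-service-group with seata-spring-boot-starter) reads the key service.vgroupMapping.<group> (vgroup_mapping.<group> in the 0.9.0 file.conf) to get a cluster name such as default, and then asks the registry for the servers in that cluster. The toy Java model below covers only that two-step lookup; TxGroupResolver and its methods are hypothetical names, not Seata classes. It also shows why the name must be spelled identically everywhere: this article's config.txt uses fxp_tx_group while the file.conf at the end uses fsp_tx_group, and a client configured with one will not resolve through the other.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Toy model (hypothetical names, not Seata's API) of the two-step lookup a
// Seata client performs: transaction group -> cluster name -> server address.
final class TxGroupResolver {
    private final Map<String, String> config;           // flattened Seata config entries
    private final Map<String, String> clusterAddresses; // cluster name -> server address (registry)

    TxGroupResolver(Map<String, String> config, Map<String, String> clusterAddresses) {
        this.config = config;
        this.clusterAddresses = clusterAddresses;
    }

    // Step 1: the client's tx-service-group is mapped to a cluster name
    // through the key service.vgroupMapping.<group> (1.x naming).
    Optional<String> clusterFor(String txServiceGroup) {
        return Optional.ofNullable(config.get("service.vgroupMapping." + txServiceGroup));
    }

    // Step 2: the cluster name is resolved to a server address via the registry.
    // An empty result models a misconfigured group: the client finds no server.
    Optional<String> serverFor(String txServiceGroup) {
        return clusterFor(txServiceGroup).map(clusterAddresses::get);
    }

    public static void main(String[] args) {
        Map<String, String> config = new HashMap<>();
        config.put("service.vgroupMapping.fxp_tx_group", "default");
        Map<String, String> clusters = new HashMap<>();
        clusters.put("default", "127.0.0.1:8091");

        TxGroupResolver resolver = new TxGroupResolver(config, clusters);
        System.out.println(resolver.serverFor("fxp_tx_group")); // Optional[127.0.0.1:8091]
        System.out.println(resolver.serverFor("fsp_tx_group")); // Optional.empty
    }
}
```

The indirection through a cluster name is what lets several transaction groups share one Seata cluster, or be switched to another cluster, by changing a single mapping entry.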

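Next, edit registry.conf in seata-server-0.9.0\seata\conf. Two blocks need changes: registry, whose type becomes nacos, and config, where we also use Nacos as the configuration center. You can simply follow the 1.4.1 registry.conf and set whichever of its properties also exist in 0.9.0.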

Version 1.4.1

In later versions the registry block is more complete. file.conf is the same as above; the fuller registry.conf is as follows:

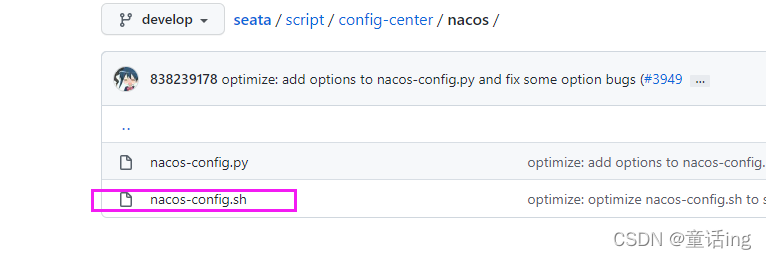

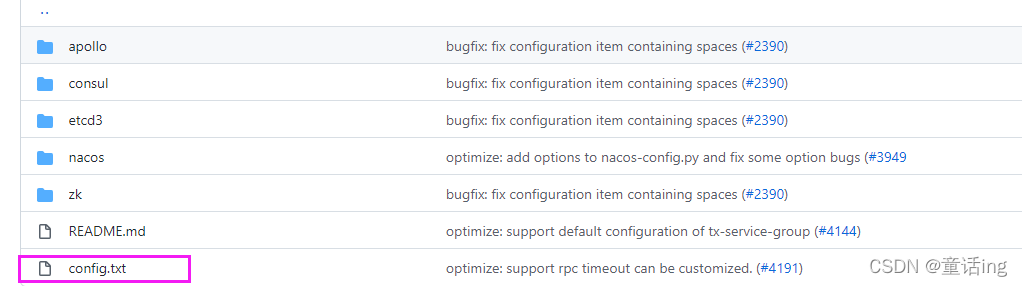

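The configuration files differ little between versions. Since we ultimately want Nacos to be Seata's configuration center, we also need to push Seata-Server's local configuration into Nacos. Two files are involved: in 0.9.0 they are nacos-config.sh and nacos-config.txt, the push script and the configuration to push, respectively. I did not find these files in the 1.4.1 release, so download them from the official site and modify them.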

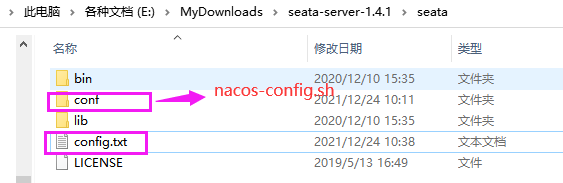

Note: put the nacos-config.sh script inside the conf directory, and put config.txt next to the conf folder (that is, in its parent directory).

Next, edit config.txt:

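service.vgroupMapping.fxp_tx_group=default
store.mode=db
store.db.driverClassName=com.mysql.jdbc.Driver
store.db.url=jdbc:mysql://127.0.0.1:3306/seata?useUnicode=true&rewriteBatchedStatements=true
store.db.user=root
store.db.password=123456

With MySQL Connector/J 8.0, set store.db.driverClassName to com.mysql.cj.jdbc.Driver instead. Keep such notes out of config.txt itself: the push script does not strip inline # comments, so they would end up in the pushed value.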
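As I read the 1.4.1 nacos-config.sh, it is run from conf/, reads config.txt from the parent directory (hence the file layout described above), strips all whitespace from each line, and splits the line at the first = into a Nacos data ID and its content. The Java re-creation below (ConfigTxtReader is a hypothetical helper, not part of Seata) mirrors that behavior and explains two practical points: values such as the JDBC URL may safely contain further = characters, and an inline # comment is not stripped but becomes part of the value.

```java
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Java re-creation (hypothetical helper, for illustration) of how the 1.4.1
// nacos-config.sh is understood to read config.txt.
final class ConfigTxtReader {

    // The script is run from conf/ and reads config.txt from the parent directory.
    static Path configTxtFor(Path confDir) {
        return confDir.toAbsolutePath().getParent().resolve("config.txt");
    }

    // Each line: strip ALL whitespace (like sed s/[[:space:]]//g), then split at the
    // FIRST '=' into a Nacos dataId and its content (like ${line%%=*} and ${line#*=}).
    static Map<String, String> parse(List<String> lines) {
        Map<String, String> entries = new LinkedHashMap<>();
        for (String raw : lines) {
            String line = raw.replaceAll("\\s", "");
            if (line.isEmpty()) {
                continue; // blank lines yield no word in the shell loop
            }
            int eq = line.indexOf('=');
            String key = eq < 0 ? line : line.substring(0, eq);
            String value = eq < 0 ? line : line.substring(eq + 1);
            entries.put(key, value);
        }
        return entries;
    }

    public static void main(String[] args) {
        Map<String, String> entries = parse(Arrays.asList(
                "store.mode=db",
                "store.db.url=jdbc:mysql://127.0.0.1:3306/seata?useUnicode=true",
                "store.db.driverClassName=com.mysql.jdbc.Driver # 8.0: com.mysql.cj.jdbc.Driver"));
        entries.forEach((k, v) -> System.out.println(k + " -> " + v));
        System.out.println(configTxtFor(Paths.get("/opt/seata/conf")));
    }
}
```

Running main shows the driverClassName entry arriving as com.mysql.jdbc.Driver#8.0:com.mysql.cj.jdbc.Driver, which is not a loadable class name, so the server would fail to connect to its database.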

Then open Git Bash in the conf directory ("Git Bash Here") and run the push command to send the config.txt settings to Nacos:

sh nacos-config.sh -h 192.168.135.33 -p 8848 -g SEATA_GROUP -t seata_namespace_id -u nacos -w nacos

-t seata_namespace_id: the Nacos namespace ID for the configuration

-g SEATA_GROUP: the Nacos configuration group name

-u nacos: the Nacos username

-w nacos: the Nacos password

The official descriptions of the parameters are:

-h: host, the default value is localhost.

-p: port, the default value is 8848.

-g: Configure grouping, the default value is ‘SEATA_GROUP’.

-t: Tenant information, corresponding to the namespace ID field of Nacos, the default value is ‘’.

-u: username, nacos 1.2.0+ on permission control, the default value is ‘’.

-w: password, nacos 1.2.0+ on permission control, the default value is ‘’.
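Each parsed entry is then published to Nacos over HTTP. A minimal sketch of that request, assuming the Nacos v1 Open API endpoint for publishing configuration (POST /nacos/v1/cs/configs with dataId, group, content and tenant parameters), shows where -g and -t end up. NacosPublishUrl is a hypothetical helper, and the -u/-w credentials are left out. Note that tenant carries the namespace ID, which is why the namespace has to be referenced by its ID rather than its name; with the default empty -t, the entries go to Nacos's public namespace.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

// Builds the publish request sent for each config.txt entry, assuming the
// Nacos v1 Open API endpoint POST /nacos/v1/cs/configs. The helper name is
// hypothetical, and authentication parameters (-u/-w) are left out.
final class NacosPublishUrl {

    static String build(String host, int port, String group, String namespaceId,
                        String dataId, String content) {
        return "http://" + host + ":" + port + "/nacos/v1/cs/configs"
                + "?dataId=" + enc(dataId)       // one config.txt key
                + "&group=" + enc(group)         // -g
                + "&content=" + enc(content)     // the value after the first '='
                + "&tenant=" + enc(namespaceId); // -t: the namespace ID, not its display name
    }

    // Java 8 compatible URL encoding (URLEncoder.encode(String, Charset) needs Java 10+).
    private static String enc(String s) {
        try {
            return URLEncoder.encode(s, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always supported", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(build("192.168.135.33", 8848, "SEATA_GROUP",
                "seata_namespace_id", "store.mode", "db"));
    }
}
```

URL-encoding matters for values such as store.db.url, whose :, /, ? and = characters would otherwise be misread as part of the request's own query string.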

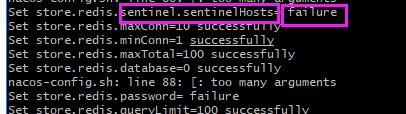

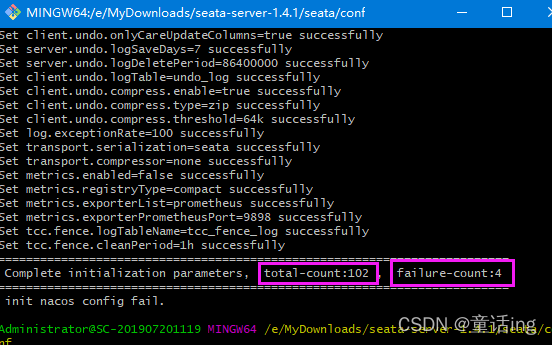

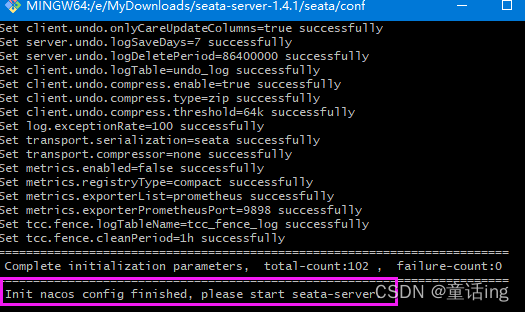

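If you get the error init nacos config fail, check your changes. If a property reports failure, correct it in config.txt; if some properties are not needed for now, back up config.txt and then delete the unneeded properties from the pushed configuration. That said, in my experiments a few failures did no harm: as long as you do not need the settings that failed, you can carry on. If you do need them, you will have to fix them properly.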

When everything succeeds, the output looks like this:

And the Nacos console shows the following:

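With the configuration pushed to Nacos, seata-server can now be started (Nacos must already be running: it is both Seata's registry and its configuration center, so it should be started first):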

Preparing the seata database

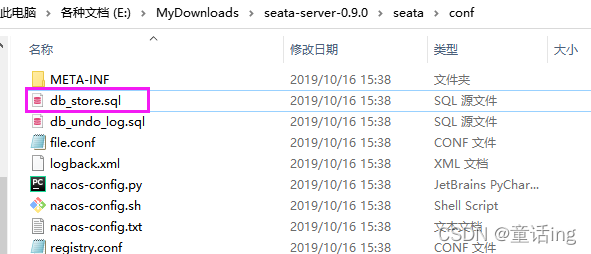

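Create the seata database and its tables. The table-creation script db_store.sql is in \seata-server-0.9.0\seata\conf (note: seata-server releases after 1.0 no longer ship this script, which is not a big problem; I have included it at the end of this article).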

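To save space, the table-creation SQL and the full contents of the two modified configuration files are at the end of this article. After running the script, the seata database contains three tables: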

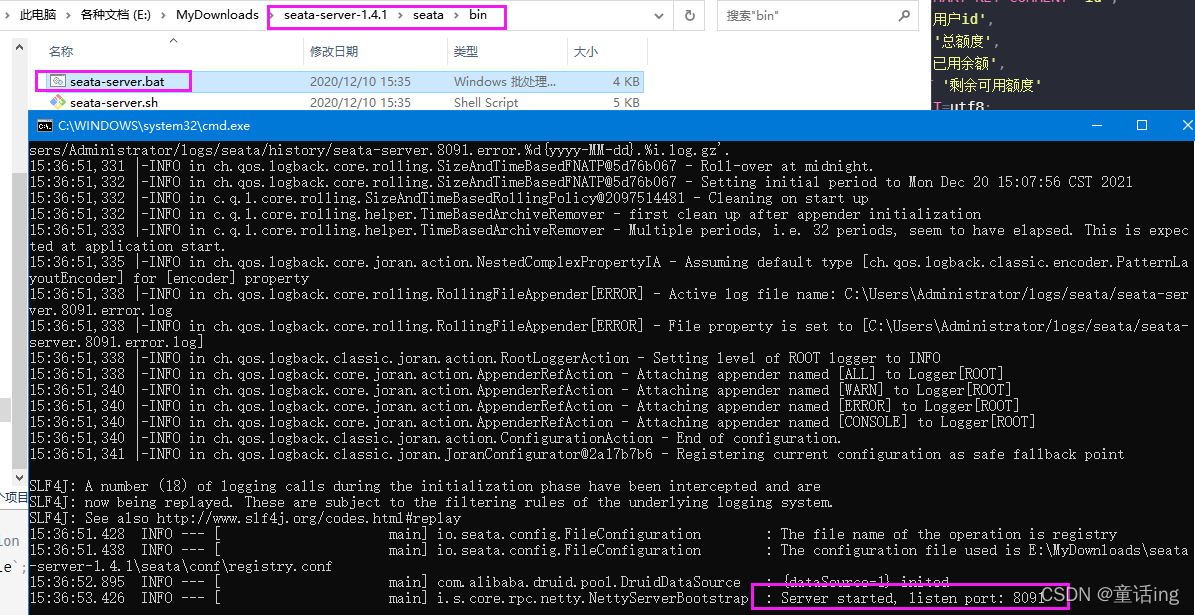

Now start seata-server: in seata-server-1.4.1/seata/bin, double-click seata-server.bat.

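With the steps above, the base Seata and Nacos environments are in place. Before building the clients, let's define the scenario used to verify distributed transactions: a user placing an order, i.e. place order → deduct stock → deduct account balance.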

It involves three microservices: the order service, the storage (inventory) service, and the account service.

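The flow: when a user places an order, the order service creates an order, then remotely calls the storage service to deduct stock for the ordered product, then remotely calls the account service to deduct the user's balance, and finally sets the order status to completed in the order service.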

The operation spans three databases and makes two remote calls, so it clearly has a distributed transaction problem.
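To make the problem concrete, here is a toy, in-memory Java model (not Seata code; the class and field names are made up) in which each step of the flow stands for a separate service committing its own local transaction. The initial stock (100) and balance (1000) match the seed rows inserted in the business databases.

```java
// Toy, in-memory illustration (not Seata code): each step below stands for a
// different service committing its own local transaction, so nothing undoes
// the earlier steps when a later one fails.
final class OrderFlowWithoutGlobalTx {
    int orderStatus = -1;      // -1: no order yet; 0: being created; 1: completed (t_order.status)
    int stockResidue = 100;    // t_storage.residue for product 1 (seed data)
    int balanceResidue = 1000; // t_account.residue for user 1 (seed data)

    void placeOrder(int count, int money) {
        orderStatus = 0;              // order service: insert into t_order, commit
        stockResidue -= count;        // storage service (remote call): deduct stock, commit
        if (money > balanceResidue) { // account service (remote call) fails
            throw new IllegalStateException("insufficient balance");
        }
        balanceResidue -= money;      // account service: deduct balance, commit
        orderStatus = 1;              // order service: mark the order completed
    }

    public static void main(String[] args) {
        OrderFlowWithoutGlobalTx flow = new OrderFlowWithoutGlobalTx();
        try {
            flow.placeOrder(10, 5000);
        } catch (IllegalStateException e) {
            System.out.println("failed: " + e.getMessage());
        }
        System.out.println("order=" + flow.orderStatus + " stock=" + flow.stockResidue
                + " balance=" + flow.balanceResidue);
    }
}
```

With an order of 10 units costing 5000, the account step fails after the stock deduction has already committed: main prints order=0 stock=90 balance=1000. The stock is gone, no money was taken, and the order is stuck in the "being created" state, which is the inconsistency a global transaction has to roll back.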

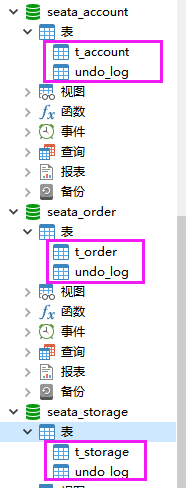

1. Create the business databases and tables

CREATE DATABASE seata_order;   -- order database
CREATE DATABASE seata_storage; -- storage (inventory) database
CREATE DATABASE seata_account; -- account database

CREATE TABLE seata_order.t_order (
    `id` BIGINT(11) NOT NULL AUTO_INCREMENT PRIMARY KEY,
    `user_id` BIGINT(11) DEFAULT NULL COMMENT 'user id',
    `product_id` BIGINT(11) DEFAULT NULL COMMENT 'product id',
    `count` INT(11) DEFAULT NULL COMMENT 'quantity',
    `money` DECIMAL(11,0) DEFAULT NULL COMMENT 'amount',
    `status` INT(1) DEFAULT NULL COMMENT 'order status: 0 = being created; 1 = completed'
) ENGINE=INNODB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE seata_storage.t_storage (
    `id` BIGINT(11) NOT NULL AUTO_INCREMENT PRIMARY KEY,
    `product_id` BIGINT(11) DEFAULT NULL COMMENT 'product id',
    `total` INT(11) DEFAULT NULL COMMENT 'total stock',
    `used` INT(11) DEFAULT NULL COMMENT 'used stock',
    `residue` INT(11) DEFAULT NULL COMMENT 'remaining stock'
) ENGINE=INNODB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

INSERT INTO seata_storage.t_storage(`id`, `product_id`, `total`, `used`, `residue`)
VALUES ('1', '1', '100', '0', '100');

CREATE TABLE seata_account.t_account (
    `id` BIGINT(11) NOT NULL AUTO_INCREMENT PRIMARY KEY COMMENT 'id',
    `user_id` BIGINT(11) DEFAULT NULL COMMENT 'user id',
    `total` DECIMAL(10,0) DEFAULT NULL COMMENT 'total credit',
    `used` DECIMAL(10,0) DEFAULT NULL COMMENT 'used balance',
    `residue` DECIMAL(10,0) DEFAULT '0' COMMENT 'remaining available credit'
) ENGINE=INNODB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

INSERT INTO seata_account.t_account(`id`, `user_id`, `total`, `used`, `residue`)
VALUES ('1', '1', '1000', '0', '1000');
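On the Java side, the services will need classes that map these rows. Here is a sketch of what the storage and account entities could look like, with hypothetical names and the usual type mapping (BIGINT to Long, INT to Integer, DECIMAL to BigDecimal). The deduction methods assume the columns are meant to keep total = used + residue, which the seed rows satisfy; this is my reading of the column comments, not code from the project.

```java
import java.math.BigDecimal;

// Hypothetical entity classes mirroring t_storage and t_account
// (BIGINT -> Long, INT -> Integer, DECIMAL -> BigDecimal). The deduction logic
// assumes the columns are meant to satisfy total = used + residue.
final class Storage {
    Long id;
    Long productId;
    Integer total;   // total stock
    Integer used;    // stock already used
    Integer residue; // remaining stock

    Storage(Long id, Long productId, Integer total, Integer used, Integer residue) {
        this.id = id; this.productId = productId;
        this.total = total; this.used = used; this.residue = residue;
    }

    void decrease(int count) {
        if (count <= 0 || count > residue) {
            throw new IllegalArgumentException("cannot deduct " + count + " from residue " + residue);
        }
        used += count;    // used grows ...
        residue -= count; // ... by exactly what residue loses
    }
}

final class Account {
    Long id;
    Long userId;
    BigDecimal total;   // total credit
    BigDecimal used;    // amount already spent
    BigDecimal residue; // remaining available credit

    Account(Long id, Long userId, BigDecimal total, BigDecimal used, BigDecimal residue) {
        this.id = id; this.userId = userId;
        this.total = total; this.used = used; this.residue = residue;
    }

    void decrease(BigDecimal money) {
        if (money.signum() <= 0 || money.compareTo(residue) > 0) {
            throw new IllegalArgumentException("cannot deduct " + money + " from residue " + residue);
        }
        used = used.add(money);
        residue = residue.subtract(money);
    }
}
```

For example, new Storage(1L, 1L, 100, 0, 100).decrease(10) leaves used = 10 and residue = 90. BigDecimal is used for money so that DECIMAL values never pass through floating point.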

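Because Seata manages the distributed transaction here, and following the principles covered in the previous article, each branch database (order, storage, and account) also needs its own rollback log table for local rollback. For 0.9.0, the DDL is in db_undo_log.sql under \seata-server-0.9.0\seata\conf; for 1.0 and later, copy the statement below and run it in all three databases.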

CREATE TABLE `undo_log` (
    `id` bigint(20) NOT NULL AUTO_INCREMENT,
    `branch_id` bigint(20) NOT NULL,
    `xid` varchar(100) NOT NULL,
    `context` varchar(128) NOT NULL,
    `rollback_info` longblob NOT NULL,
    `log_status` int(11) NOT NULL,
    `log_created` datetime NOT NULL,
    `log_modified` datetime NOT NULL,
    `ext` varchar(100) DEFAULT NULL,
    PRIMARY KEY (`id`),
    UNIQUE KEY `ux_undo_log` (`xid`,`branch_id`)
) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;
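The undo_log table is what makes AT-mode rollback possible: before a branch changes a row, Seata records the row's before image (and after image) in rollback_info, keyed by xid and branch_id (the unique key ux_undo_log), in the same local transaction as the business update. The toy model below (UndoLogDemo is hypothetical and tracks only a single residue column) shows the commit and rollback paths. It leaves out what the real implementation adds on top, such as global row locks and checking the current row against the after image before restoring (compare undo.data.validation in file.conf).

```java
import java.util.HashMap;
import java.util.Map;

// Toy model of the idea behind undo_log in AT mode (not Seata's implementation):
// before a branch updates a row, it records the row's "before image" under the
// key (xid, branch_id), matching the unique key ux_undo_log.
final class UndoLogDemo {
    static final class UndoEntry {
        final long rowId;
        final int beforeImage;
        UndoEntry(long rowId, int beforeImage) { this.rowId = rowId; this.beforeImage = beforeImage; }
    }

    final Map<Long, Integer> residueByRow = new HashMap<>(); // business table: row id -> residue
    final Map<String, UndoEntry> undoLog = new HashMap<>();  // "xid/branchId" -> before image

    private static String key(String xid, long branchId) { return xid + "/" + branchId; }

    // Branch phase 1: save the before image, then apply the update locally.
    // (In Seata both writes commit together in one local transaction.)
    void update(String xid, long branchId, long rowId, int newResidue) {
        String k = key(xid, branchId);
        if (undoLog.containsKey(k)) {
            throw new IllegalStateException("duplicate (xid, branch_id): " + k); // like ux_undo_log
        }
        undoLog.put(k, new UndoEntry(rowId, residueByRow.get(rowId)));
        residueByRow.put(rowId, newResidue);
    }

    // Global commit: the before image is no longer needed.
    void commit(String xid, long branchId) {
        undoLog.remove(key(xid, branchId));
    }

    // Global rollback: write the before image back and drop the record.
    void rollback(String xid, long branchId) {
        UndoEntry e = undoLog.remove(key(xid, branchId));
        if (e != null) {
            residueByRow.put(e.rowId, e.beforeImage);
        }
    }
}
```

Because the undo record commits together with the business change, each branch can release its local database locks right after phase 1 and still be rolled back later if the global transaction fails.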

The resulting business database structure is as follows:

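Now we set up the client side. In a microservice project it is customary to manage versions and modules centrally in a parent pom, so we create a parent project, SpringCloud, with the following pom: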

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.dl</groupId>
    <artifactId>SpringCloud</artifactId>
    <version>1.0-SNAPSHOT</version>

    <modules>
        <module>cloud-provider-payment8001</module>
        <module>cloud-consumer-order80</module>
        <module>cloud-api-commons</module>
        <module>cloud-eureka-server7001</module>
        <module>cloud-eureka-server7002</module>
        <module>cloud-provider-payment8002</module>
        <module>cloud-provider-payment8004</module>
        <module>cloud-provider-hystrix-payment8001</module>
        <module>cloud-consumer-feign-hystrix-order80</module>
        <module>cloud-consumer-feign-order80</module>
        <module>cloud-consumer-hystrix-dashboard9001</module>
        <module>cloud-gateway-gateway9527</module>
        <module>cloud-config-center-3344</module>
        <module>cloud-config-client-3355</module>
        <module>cloud-config-client-3366</module>
        <module>cloud-stream-rabbitmq-provider8801</module>
        <module>cloud-stream-rabbitmq-consumer8802</module>
        <module>cloud-stream-rabbitmq-consumer8803</module>
        <module>cloudalibaba-provider-payment9001</module>
        <module>cloudalibaba-provider-payment9002</module>
        <module>cloudalibaba-consumer-nacos-order83</module>
        <module>cloudalibaba-config-nacos-client3377</module>
        <module>cloud-snowflake</module>
        <module>cloudalibaba-seata-order-service2001</module>
        <module>cloudalibaba-seata-storage-service2002</module>
        <module>cloudalibaba-seata-account-service2003</module>
    </modules>

    <packaging>pom</packaging>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <maven.compiler.source>1.8</maven.compiler.source>
        <maven.compiler.target>1.8</maven.compiler.target>
        <junit.version>4.12</junit.version>
        <lombok.version>1.18.16</lombok.version>
        <mysql.version>8.0.18</mysql.version>
        <druid.version>1.1.20</druid.version>
        <!-- <logback.version>1.2.3</logback.version> -->
        <!-- <slf4j.version>1.7.25</slf4j.version> -->
        <log4j.version>1.2.17</log4j.version>
        <mybatis.spring.boot.version>2.1.2</mybatis.spring.boot.version>
    </properties>

    <!-- Inherited by child modules: pins dependency versions, so child modules can omit the version element -->
    <dependencyManagement>
        <dependencies>
            <!-- Note: if you use Seata 1.0, use spring cloud alibaba 2.1.0.RELEASE -->
            <dependency>
                <groupId>com.alibaba.cloud</groupId>
                <artifactId>spring-cloud-alibaba-dependencies</artifactId>
                <version>2.2.2.RELEASE</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
            <!-- spring boot 2.2.2 -->
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-dependencies</artifactId>
                <version>2.2.2.RELEASE</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
            <!-- spring cloud Hoxton.SR1 -->
            <dependency>
                <groupId>org.springframework.cloud</groupId>
                <artifactId>spring-cloud-dependencies</artifactId>
                <version>Hoxton.SR1</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
            <!-- MySQL connector -->
            <dependency>
                <groupId>mysql</groupId>
                <artifactId>mysql-connector-java</artifactId>
                <version>${mysql.version}</version>
            </dependency>
            <!-- Druid data source -->
            <dependency>
                <groupId>com.alibaba</groupId>
                <artifactId>druid</artifactId>
                <version>${druid.version}</version>
            </dependency>
            <!-- MyBatis integration for Spring Boot -->
            <dependency>
                <groupId>org.mybatis.spring.boot</groupId>
                <artifactId>mybatis-spring-boot-starter</artifactId>
                <version>${mybatis.spring.boot.version}</version>
            </dependency>
            <dependency>
                <groupId>junit</groupId>
                <artifactId>junit</artifactId>
                <version>${junit.version}</version>
            </dependency>
            <dependency>
                <groupId>log4j</groupId>
                <artifactId>log4j</artifactId>
                <version>${log4j.version}</version>
            </dependency>
            <!-- Lombok -->
            <dependency>
                <groupId>org.projectlombok</groupId>
                <artifactId>lombok</artifactId>
                <version>${lombok.version}</version>
                <optional>true</optional>
                <scope>provided</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>

    <!-- Packaging plugin -->
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
                <configuration>
                    <fork>true</fork>
                    <addResources>true</addResources>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>

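The child modules are cloudalibaba-seata-order-service2001, cloudalibaba-seata-storage-service2002, and cloudalibaba-seata-account-service2003. All three need the same dependencies; the complete pom of the order service is shown below, and you only need its dependency section.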

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <parent>
        <artifactId>SpringCloud</artifactId>
        <groupId>com.dl</groupId>
        <version>1.0-SNAPSHOT</version>
    </parent>
    <modelVersion>4.0.0</modelVersion>

    <artifactId>cloudalibaba-seata-order-service2001</artifactId>

    <dependencies>
        <!-- nacos -->
        <dependency>
            <groupId>com.alibaba.cloud</groupId>
            <artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>
        </dependency>
        <!-- seata 0.9 -->
        <!-- <dependency>-->
        <!--     <groupId>com.alibaba.cloud</groupId>-->
        <!--     <artifactId>spring-cloud-starter-alibaba-seata</artifactId>-->
        <!--     <exclusions>-->
        <!--         <exclusion>-->
        <!--             <artifactId>seata-all</artifactId>-->
        <!--             <groupId>io.seata</groupId>-->
        <!--         </exclusion>-->
        <!--     </exclusions>-->
        <!-- </dependency>-->
        <!-- <dependency>-->
        <!--     <groupId>io.seata</groupId>-->
        <!--     <artifactId>seata-all</artifactId>-->
        <!--     <version>0.9.0</version>-->
        <!-- </dependency>-->
        <!-- seata 1.4.1 -->
        <dependency>
            <groupId>com.alibaba.cloud</groupId>
            <artifactId>spring-cloud-starter-alibaba-seata</artifactId>
            <exclusions>
                <!-- exclude the bundled Seata artifacts and pin versions that match the server -->
                <exclusion>
                    <groupId>io.seata</groupId>
                    <artifactId>seata-all</artifactId>
                </exclusion>
                <exclusion>
                    <groupId>io.seata</groupId>
                    <artifactId>seata-spring-boot-starter</artifactId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>io.seata</groupId>
            <artifactId>seata-all</artifactId>
            <version>1.4.1</version>
        </dependency>
        <dependency>
            <groupId>io.seata</groupId>
            <artifactId>seata-spring-boot-starter</artifactId>
            <version>1.4.1</version>
        </dependency>
        <!-- feign -->
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-starter-openfeign</artifactId>
        </dependency>
        <!-- web + actuator -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-actuator</artifactId>
        </dependency>
        <!-- mysql + druid -->
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>5.1.37</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>druid-spring-boot-starter</artifactId>
            <version>1.1.10</version>
        </dependency>
        <dependency>
            <groupId>org.mybatis.spring.boot</groupId>
            <artifactId>mybatis-spring-boot-starter</artifactId>
            <version>2.0.0</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
    </dependencies>
</project>

Appendix

The table DDL from db_store.sql:

-- the table to store GlobalSession data
drop table if exists `global_table`;
create table `global_table` (
    `xid` varchar(128) not null,
    `transaction_id` bigint,
    `status` tinyint not null,
    `application_id` varchar(32),
    `transaction_service_group` varchar(32),
    `transaction_name` varchar(128),
    `timeout` int,
    `begin_time` bigint,
    `application_data` varchar(2000),
    `gmt_create` datetime,
    `gmt_modified` datetime,
    primary key (`xid`),
    key `idx_gmt_modified_status` (`gmt_modified`, `status`),
    key `idx_transaction_id` (`transaction_id`)
);

-- the table to store BranchSession data
drop table if exists `branch_table`;
create table `branch_table` (
    `branch_id` bigint not null,
    `xid` varchar(128) not null,
    `transaction_id` bigint,
    `resource_group_id` varchar(32),
    `resource_id` varchar(256),
    `lock_key` varchar(128),
    `branch_type` varchar(8),
    `status` tinyint,
    `client_id` varchar(64),
    `application_data` varchar(2000),
    `gmt_create` datetime,
    `gmt_modified` datetime,
    primary key (`branch_id`),
    key `idx_xid` (`xid`)
);

-- the table to store lock data
-- (transaction_id and branch_id use bigint: in MySQL, `long` is a synonym for MEDIUMTEXT)
drop table if exists `lock_table`;
create table `lock_table` (
    `row_key` varchar(128) not null,
    `xid` varchar(96),
    `transaction_id` bigint,
    `branch_id` bigint,
    `resource_id` varchar(256),
    `table_name` varchar(32),
    `pk` varchar(36),
    `gmt_create` datetime,
    `gmt_modified` datetime,
    primary key (`row_key`)
);

The complete file.conf after modification:

transport {
  # tcp udt unix-domain-socket
  type = "TCP"
  #NIO NATIVE
  server = "NIO"
  #enable heartbeat
  heartbeat = true
  #thread factory for netty
  thread-factory {
    boss-thread-prefix = "NettyBoss"
    worker-thread-prefix = "NettyServerNIOWorker"
    server-executor-thread-prefix = "NettyServerBizHandler"
    share-boss-worker = false
    client-selector-thread-prefix = "NettyClientSelector"
    client-selector-thread-size = 1
    client-worker-thread-prefix = "NettyClientWorkerThread"
    # netty boss thread size,will not be used for UDT
    boss-thread-size = 1
    #auto default pin or 8
    worker-thread-size = 8
  }
  shutdown {
    # when destroy server, wait seconds
    wait = 3
  }
  serialization = "seata"
  compressor = "none"
}

service {
  vgroup_mapping.fsp_tx_group = "default" # custom transaction group name (modified)
  default.grouplist = "127.0.0.1:8091"
  enableDegrade = false
  disable = false
  max.commit.retry.timeout = "-1"
  max.rollback.retry.timeout = "-1"
  disableGlobalTransaction = false
}

client {
  async.commit.buffer.limit = 10000
  lock {
    retry.internal = 10
    retry.times = 30
  }
  report.retry.count = 5
  tm.commit.retry.count = 1
  tm.rollback.retry.count = 1
}

## transaction log store
store {
  ## store mode: file, db
  mode = "db"

  ## file store
  file {
    dir = "sessionStore"
    # branch session size , if exceeded first try compress lockkey, still exceeded throws exceptions
    max-branch-session-size = 16384
    # global session size , if exceeded throws exceptions
    max-global-session-size = 512
    # file buffer size , if exceeded allocate new buffer
    file-write-buffer-cache-size = 16384
    # when recover batch read size
    session.reload.read_size = 100
    # async, sync
    flush-disk-mode = async
  }

  ## database store
  db {
    ## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp) etc.
    datasource = "dbcp"
    ## mysql/oracle/h2/oceanbase etc.
    db-type = "mysql"
    driver-class-name = "com.mysql.jdbc.Driver"
    url = "jdbc:mysql://127.0.0.1:3306/seata"
    user = "root"
    password = "5120154230"
    min-conn = 1
    max-conn = 3
    global.table = "global_table"
    branch.table = "branch_table"
    lock-table = "lock_table"
    query-limit = 100
  }
}

lock {
  ## the lock store mode: local, remote
  mode = "remote"
  local {
    ## store locks in user's database
  }
  remote {
    ## store locks in the seata's server
  }
}

recovery {
  #schedule committing retry period in milliseconds
  committing-retry-period = 1000
  #schedule asyn committing retry period in milliseconds
  asyn-committing-retry-period = 1000
  #schedule rollbacking retry period in milliseconds
  rollbacking-retry-period = 1000
  #schedule timeout retry period in milliseconds
  timeout-retry-period = 1000
}

transaction {
  undo.data.validation = true
  undo.log.serialization = "jackson"
  undo.log.save.days = 7
  #schedule delete expired undo_log in milliseconds
  undo.log.delete.period = 86400000
  undo.log.table = "undo_log"
}

## metrics settings
metrics {
  enabled = false
  registry-type = "compact"
  # multi exporters use comma divided
  exporter-list = "prometheus"
  exporter-prometheus-port = 9898
}

support {
  ## spring
  spring {
    # auto proxy the DataSource bean
    datasource.autoproxy = false
  }
}

The modified registry.conf:

registry {
  # file, nacos, eureka, redis, zk, consul, etcd3, sofa
  type = "nacos"
  loadBalance = "RandomLoadBalance"
  loadBalanceVirtualNodes = 10

  nacos {
    application = "seata-server"
    serverAddr = "127.0.0.1:8848"
    group = "SEATA_GROUP"
    namespace = "seata_namespace_id"
    cluster = "default"
    username = "nacos"
    password = "nacos"
  }
  eureka {
    serviceUrl = "http://localhost:8761/eureka"
    application = "default"
    weight = "1"
  }
  redis {
    serverAddr = "localhost:6379"
    db = 0
    password = ""
    cluster = "default"
    timeout = 0
  }
  zk {
    cluster = "default"
    serverAddr = "127.0.0.1:2181"
    sessionTimeout = 6000
    connectTimeout = 2000
    username = ""
    password = ""
  }
  consul {
    cluster = "default"
    serverAddr = "127.0.0.1:8500"
  }
  etcd3 {
    cluster = "default"
    serverAddr = "http://localhost:2379"
  }
  sofa {
    serverAddr = "127.0.0.1:9603"
    application = "default"
    region = "DEFAULT_ZONE"
    datacenter = "DefaultDataCenter"
    cluster = "default"
    group = "SEATA_GROUP"
    addressWaitTime = "3000"
  }
  file {
    name = "file.conf"
  }
}

config {
  # file, nacos, apollo, zk, consul, etcd3
  type = "nacos"

  nacos {
    application = "seata-server"
    serverAddr = "127.0.0.1:8848"
    group = "SEATA_GROUP"
    namespace = "seata_namespace_id"
    cluster = "default"
    username = "nacos"
    password = "nacos"
  }
  consul {
    serverAddr = "127.0.0.1:8500"
  }
  apollo {
    appId = "seata-server"
    apolloMeta = "http://192.168.1.204:8801"
    namespace = "application"
    apolloAccesskeySecret = ""
  }
  zk {
    serverAddr = "127.0.0.1:2181"
    sessionTimeout = 6000
    connectTimeout = 2000
    username = ""
    password = ""
  }
  etcd3 {
    serverAddr = "http://localhost:2379"
  }
  file {
    name = "file.conf"
  }
}